The OWASP Benchmark for Security Automation (OWASP benchmark) is a free and open test suite designed to evaluate the speed, coverage, and accuracy of automated software vulnerability detection tools and services.

In this article, you’ll learn how to run the OWASP benchmark against Kiuwan Code Security for yourself.

The OWASP benchmark evaluates tools using 4 indicators:

The OWASP benchmark contains 2740 individual tests covering the following vulnerabilities:

| Key | Description | CWE code | Kiuwan Rule |
|---|---|---|---|
| pathtraver | Path Traversal | 22 | Avoid non-neutralized user-controlled input composed in a pathname to a resource |
| cmdi | OS Command Injection | 78 | Improper Neutralization of Special Elements used in an OS Command (‘OS Command Injection’) |
| xss | Cross-site Scripting | 79 | Improper Neutralization of Input During Web Page Generation (‘Cross-site Scripting’) |
| sqli | SQL Injection | 89 | Improper Neutralization of Special Elements used in an SQL Command (‘SQL Injection’) |
| ldapi | LDAP Injection | 90 | Avoid non-neutralized user-controlled input in LDAP search filters |
| crypto | Risky Cryptographic Algorithm | 327 | Weak symmetric encryption algorithm |
| hash | Reversible One-Way Hash | 328 | Weak cryptographic hash |
| weakrand | Use of Insufficiently Random Values | 330 | Standard pseudo-random number generators cannot withstand cryptographic attacks |
| trustbound | Trust Boundary Violation | 501 | Trust boundary violation |
| securecookie | Sensitive Cookie | 614 | Generate server-side cookies with adequate security properties |
| xpathi | XPath Injection | 643 | Improper Neutralization of Data within XPath Expressions (‘XPath Injection’) |
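If you post-process the benchmark results with your own scripts, it can help to keep the category keys and CWE numbers from this table in code. The sketch below is one way to do that in Java; the `BenchmarkCategory` enum and its `fromKey` method are hypothetical names, not part of the OWASP Benchmark or Kiuwan.

```java
import java.util.Optional;

// Hypothetical helper: the OWASP Benchmark category keys from the table,
// each paired with its CWE number. Not part of the benchmark or Kiuwan.
enum BenchmarkCategory {
    PATHTRAVER("pathtraver", 22),
    CMDI("cmdi", 78),
    XSS("xss", 79),
    SQLI("sqli", 89),
    LDAPI("ldapi", 90),
    CRYPTO("crypto", 327),
    HASH("hash", 328),
    WEAKRAND("weakrand", 330),
    TRUSTBOUND("trustbound", 501),
    SECURECOOKIE("securecookie", 614),
    XPATHI("xpathi", 643);

    final String key; // category key as used by the benchmark
    final int cwe;    // associated CWE number

    BenchmarkCategory(String key, int cwe) {
        this.key = key;
        this.cwe = cwe;
    }

    // Looks up a category by its benchmark key; empty if the key is unknown.
    static Optional<BenchmarkCategory> fromKey(String key) {
        for (BenchmarkCategory c : values()) {
            if (c.key.equals(key)) {
                return Optional.of(c);
            }
        }
        return Optional.empty();
    }
}
```

For example, `BenchmarkCategory.fromKey("sqli")` returns the `SQLI` constant, whose `cwe` field is 89.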

You will need a computer that meets the following requirements:

Ensure that Java and Maven are installed, that their environment variables (such as JAVA_HOME) are set, and that both tools are in your PATH. To confirm this, open a command prompt and run the following commands:

```
java -version
mvn -version
```

Download the latest release of the OWASP benchmark from https://github.com/OWASP/Benchmark.

Extract this file to a local folder on your computer. For this article, we’re going to use the folder name Benchmark-master as the destination for the unzipped benchmark.

You will need a paid or trial Kiuwan account to run the OWASP Benchmark.

If you are a current Kiuwan Code Security user, you can run the Benchmark in your existing account, so continue to the section Execute the Benchmark Analysis.

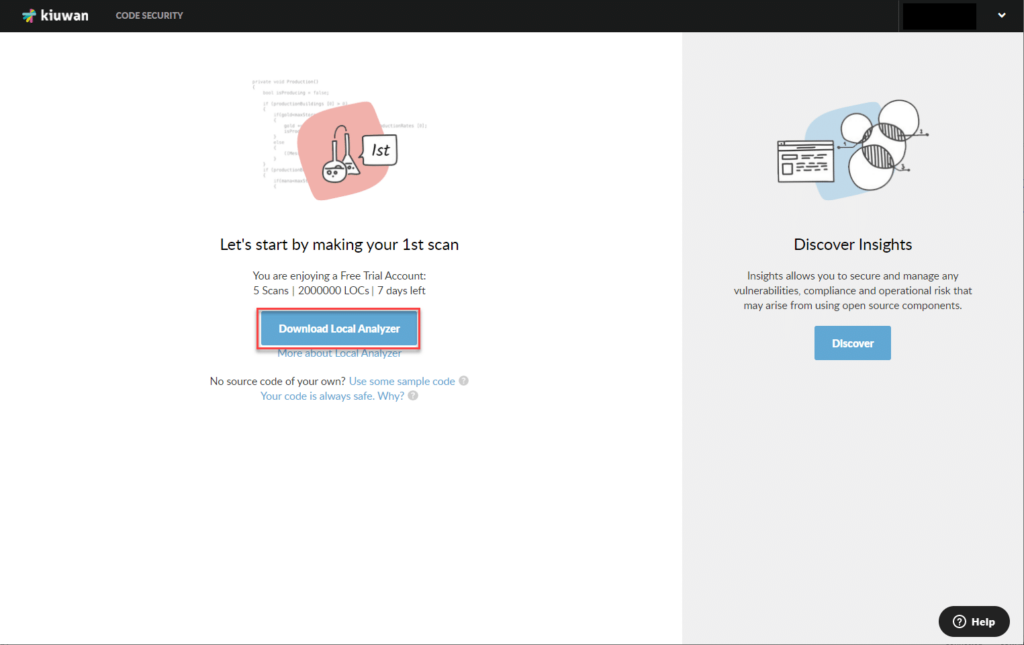

Your very first scan with Kiuwan is run using the Local Analyzer, as described below. If you would like to repeat the benchmark after running your first scan, you can use either the Local Analyzer or the Cloud Analyzer.

Please note that a trial account is limited to scanning a single application. Once you have used a trial account to perform the OWASP Benchmark, you will not be able to use it to scan a different application.

To start the Kiuwan Local Analyzer (KLA), open the folder where you extracted the .ZIP archive, and execute the file kiuwan.cmd.

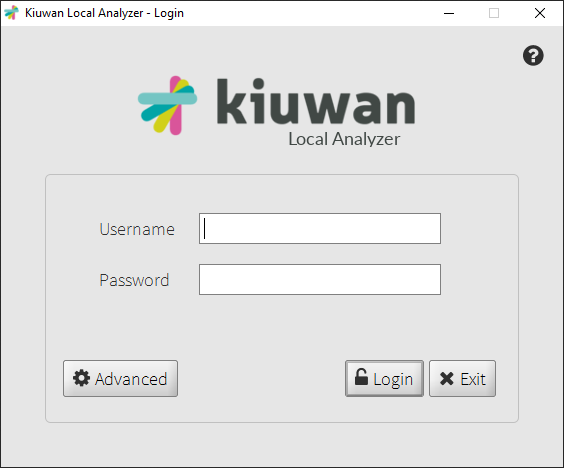

If this is the first time that you have used KLA, you will see a welcome message. Click Let’s Go. Then, the Login window appears.

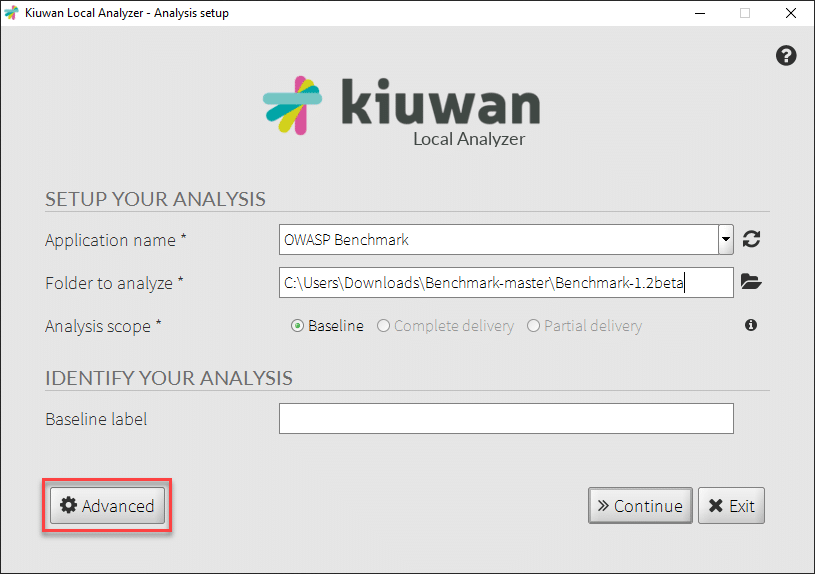

Log on with your Kiuwan username and password. The Set Up Your Analysis dialog appears, as shown below.

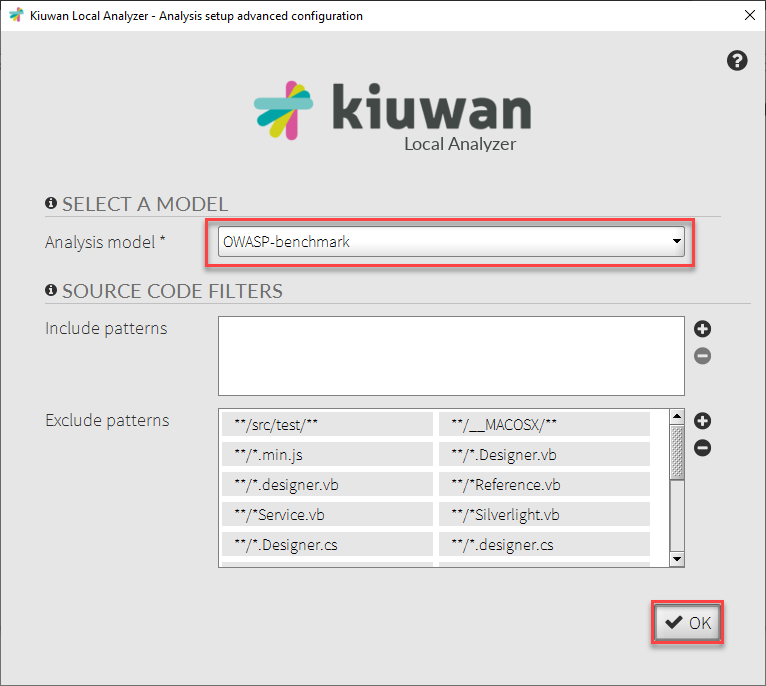

In the Advanced Configuration window, use the Analysis model drop-down to choose OWASP-benchmark.

Click OK; then click Continue.

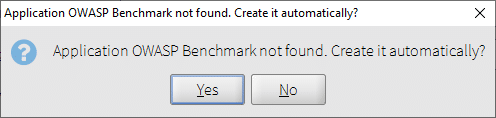

The first time that you scan the OWASP Benchmark application, you will see a prompt to create the application.

Click Yes. Then, click Analyze to start the analysis.

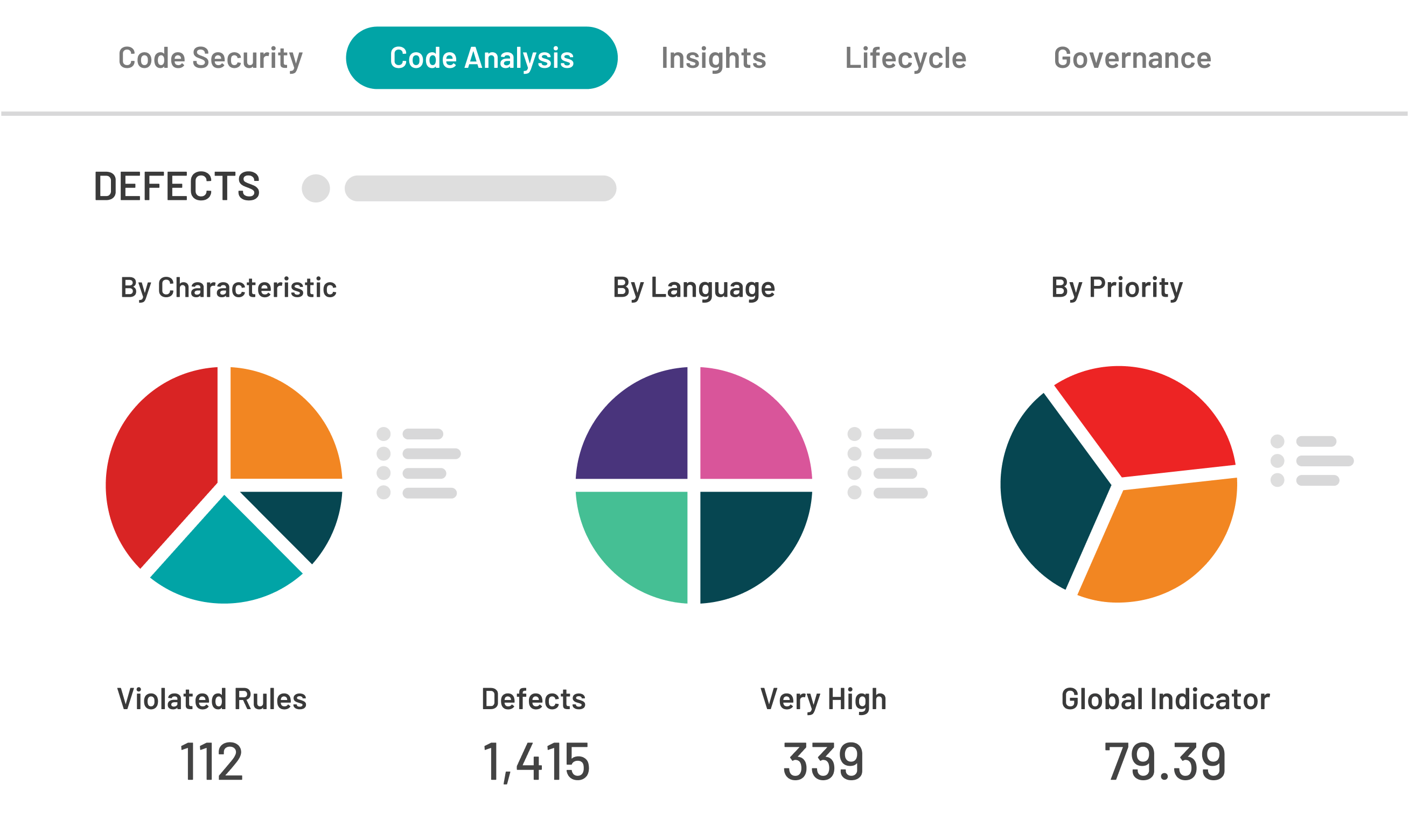

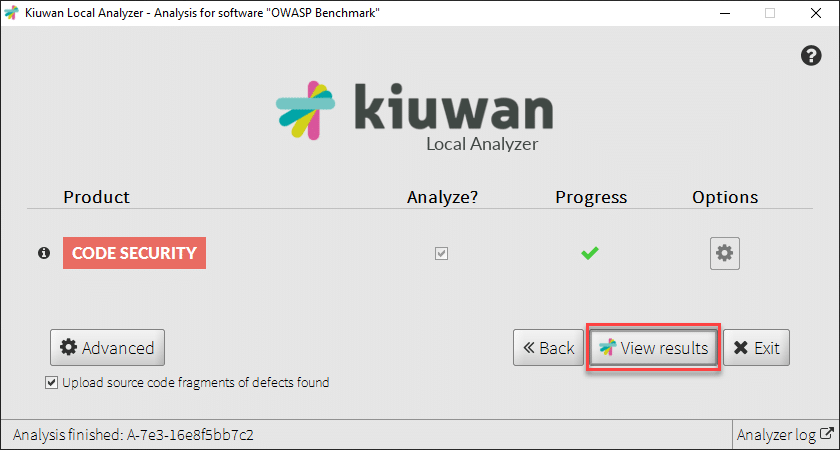

Once the analysis completes, click View Results. The Kiuwan dashboard appears with the results of the scan.

Continue to the directions below to see how to export the results and generate the OWASP Benchmark scorecard.

If you are not already logged on, open the Kiuwan website. In the top menu bar, click Log In.

Enter your Kiuwan username and password, and then click Log in. The Kiuwan dashboard appears.

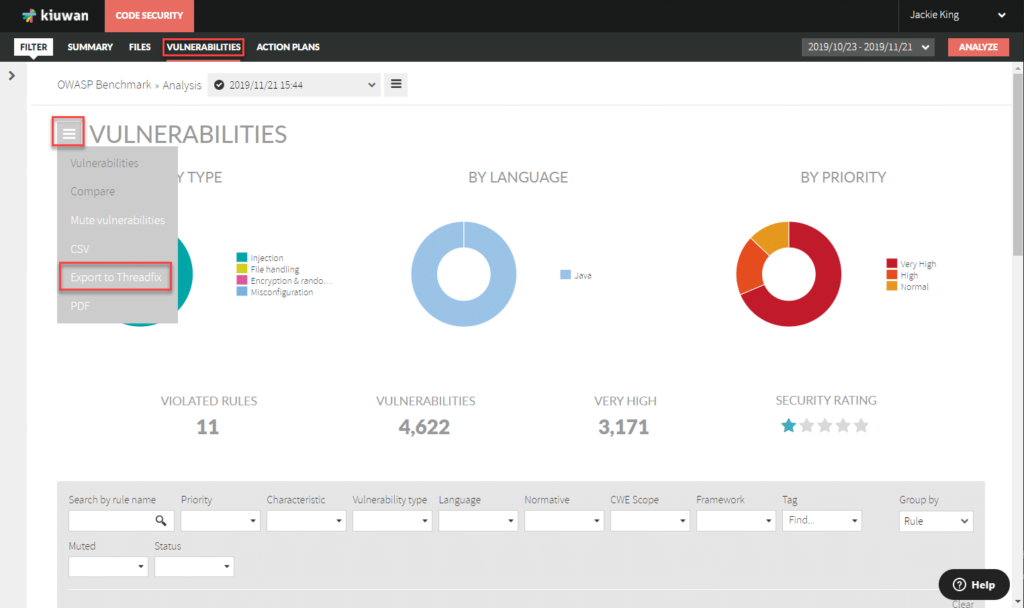

From the top menu, choose Vulnerabilities. Click the menu icon next to the “VULNERABILITIES” title, then choose Export to Threadfix, as shown above. This downloads a results file with a name similar to “126127_2019-11-21 22_44_08.0_Vulnerabilities.threadfix”.

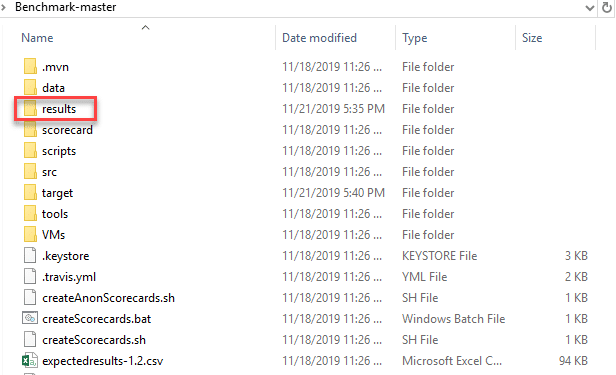

On your local computer, open the location where you downloaded the .threadfix file. Copy this file.

Then open the location where you unzipped the OWASP benchmark (for example, “Benchmark-master”), and paste the .threadfix file into its results folder.
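If you repeat the benchmark often, the copy step can also be scripted. The following Java sketch assumes the export was saved to a downloads folder and that the benchmark was unzipped to a folder such as Benchmark-master; the `ThreadfixCopier` class and its `copyResults` method are hypothetical, and both paths are yours to supply.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: copies every *.threadfix file found in downloadDir
// into the "results" folder of the unzipped benchmark.
class ThreadfixCopier {
    static List<Path> copyResults(Path downloadDir, Path benchmarkDir) throws IOException {
        Path resultsDir = benchmarkDir.resolve("results");
        Files.createDirectories(resultsDir); // no-op if the folder already exists
        List<Path> copied = new ArrayList<>();
        // Glob filter: only files whose names end in .threadfix
        try (DirectoryStream<Path> files = Files.newDirectoryStream(downloadDir, "*.threadfix")) {
            for (Path file : files) {
                Path target = resultsDir.resolve(file.getFileName());
                // Overwrite an earlier export with the same name, if any
                Files.copy(file, target, StandardCopyOption.REPLACE_EXISTING);
                copied.add(target);
            }
        }
        return copied;
    }
}
```

For example, `ThreadfixCopier.copyResults(Paths.get(System.getProperty("user.home"), "Downloads"), Paths.get("Benchmark-master"))` copies every `.threadfix` file it finds and returns the destination paths.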

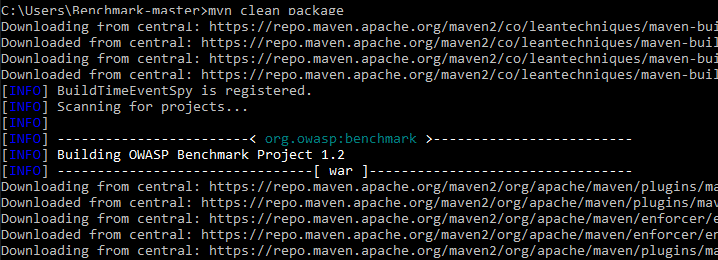

Open a command window.

Navigate to the folder where you unzipped the benchmark (e.g. “Benchmark-master”), and run the following command:

```
mvn clean package
```

This process may take several minutes to complete.

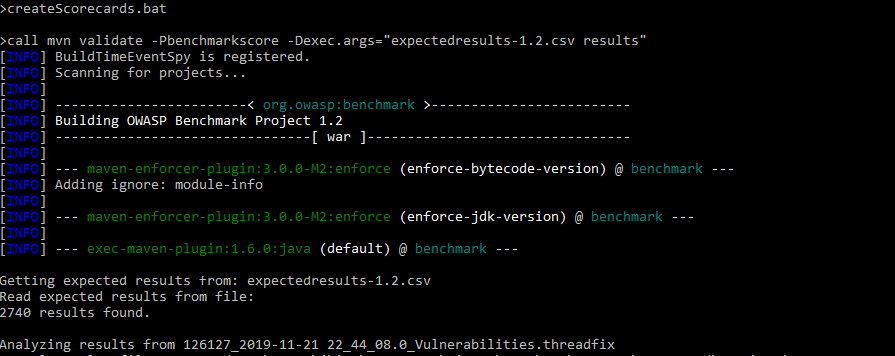

When it finishes, run the following command:

```
createScorecards.bat
```

This command generates results as HTML and PNG files and stores them in the scorecard folder of your unzipped Benchmark-master folder.

When execution completes, the scorecard folder contains a report for each vulnerability type and a report for each analysis tool evaluated. Close the command window.

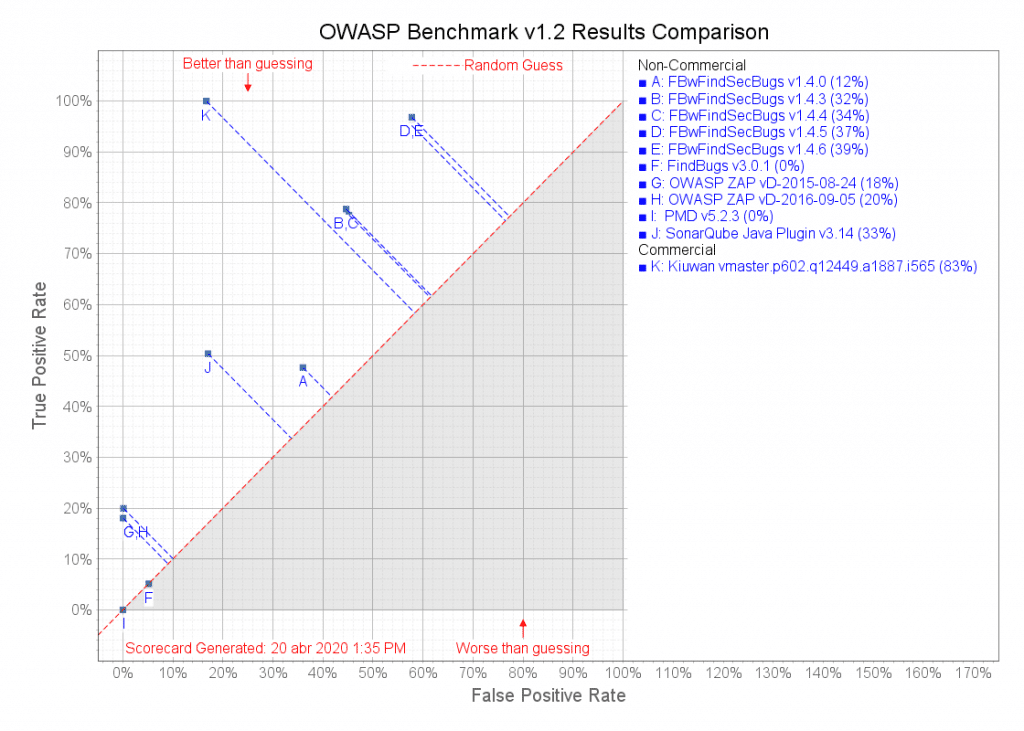

To see the results for each tool in the benchmark, including Kiuwan Code Security, open the scorecard folder and locate the file benchmark_comparison.png. This file contains a benchmark results chart similar to the one shown below.

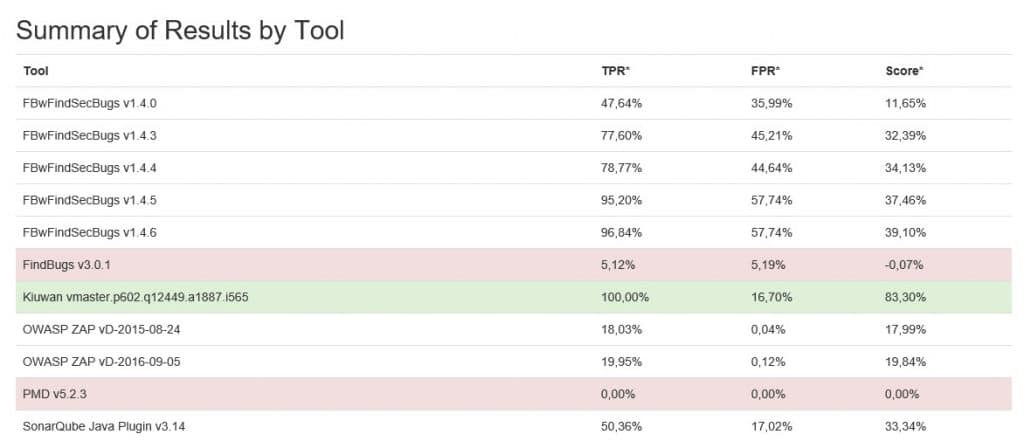

Results for Kiuwan are at point “K” in the graph. These come from an April 2020 analysis of Kiuwan Code Security using the latest published version of the OWASP benchmark. Kiuwan scored a True Positive Rate (TPR) of 100% and a False Positive Rate (FPR) of 16.7%, for an overall score of 83.3%, as shown in the table below. By comparison, the next-highest tool in the chart (at point E) scored 39%.
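The overall score is the true positive rate minus the false positive rate, which is why a TPR of 100% and an FPR of 16.7% yield 83.3%. The sketch below shows that arithmetic in Java, computing each rate from the four possible outcomes of a test case (true positive, false negative, true negative, false positive). The `BenchmarkScore` class is a hypothetical illustration, not code from the benchmark's scorecard generator, and it handles only a single set of counts.

```java
// Hypothetical illustration of the benchmark scoring arithmetic.
// Each test case is either a real vulnerability (TP if reported, FN if missed)
// or safe code (FP if reported, TN if correctly ignored).
final class BenchmarkScore {
    private BenchmarkScore() {}

    // Fraction of real vulnerabilities the tool reported: TP / (TP + FN).
    static double truePositiveRate(int tp, int fn) {
        return (double) tp / (tp + fn);
    }

    // Fraction of safe test cases the tool wrongly flagged: FP / (FP + TN).
    static double falsePositiveRate(int fp, int tn) {
        return (double) fp / (fp + tn);
    }

    // Overall score: TPR minus FPR.
    static double score(int tp, int fn, int tn, int fp) {
        return truePositiveRate(tp, fn) - falsePositiveRate(fp, tn);
    }
}
```

For example, with hypothetical counts of 50 true positives, 0 false negatives, 50 true negatives, and 10 false positives, `BenchmarkScore.score(50, 0, 50, 10)` returns about 0.833 (1.0 minus 10/60).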

The OWASP benchmark uses code generated for testing purposes. Each test case in the benchmark is designed to verify a specific flaw, regardless of how common or rare that flaw might be in the real world. Therefore, results of the benchmark are useful for comparing static analysis tools, but are not necessarily a good predictor of how well a SAST tool will detect vulnerabilities in “real” code.

False positives that Kiuwan reports can be grouped into two categories: collections (sets, lists, and maps) and control flow statements.

Because Kiuwan is a static analysis tool, it does not execute the insertions and deletions performed on a collection, so it does not evaluate each element individually.

Instead, once an unsafe element is inserted into a collection, the whole collection is considered unsafe.

Thus, in a test like the one shown below, Kiuwan reports a command injection vulnerability even though none exists, which makes it a false positive.

```java
// Excerpt from an OWASP Benchmark test case: param (user input), r (a Runtime),
// cmd, and argsEnv are defined elsewhere in the test method.
String bar = "alsosafe";
if (param != null) {
    java.util.List<String> valuesList = new java.util.ArrayList<String>( );
    valuesList.add("safe");
    valuesList.add( param );
    valuesList.add( "moresafe" );

    valuesList.remove(0); // remove the 1st safe value

    bar = valuesList.get(1); // get the last 'safe' value
}

Process p = r.exec(cmd + bar, argsEnv);
```

Resolving cases like this correctly requires a DAST or IAST tool, which Kiuwan is not.
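To make the collection behavior concrete, here is a toy Java model of coarse, collection-level taint tracking. It illustrates the general technique under our own assumptions and is not Kiuwan's implementation; the `CoarseTaintList` class and its methods are hypothetical. A single flag records whether any unsafe value was ever inserted, and removals never clear it.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model (hypothetical, not Kiuwan's implementation): taint is tracked
// for the list as a whole rather than for each element.
final class CoarseTaintList {
    private final List<String> values = new ArrayList<>();
    private boolean tainted = false; // once set, never cleared

    void add(String value, boolean fromUserInput) {
        values.add(value);
        tainted |= fromUserInput; // one unsafe insert taints the whole list
    }

    void remove(int index) {
        values.remove(index); // taint is not recomputed on removal
    }

    String get(int index) {
        return values.get(index);
    }

    // A coarse analysis treats every value read from this list as unsafe.
    boolean isTainted() {
        return tainted;
    }
}
```

Replaying the test above (adding "safe", `param`, and "moresafe", then calling `remove(0)` and `get(1)`) returns "moresafe", yet `isTainted()` still returns true. An element-wise analysis would track taint per position instead, at the cost of modeling every insertion and removal precisely.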

Similarly, a static analysis tool cannot always evaluate the condition of a control flow statement, as in the following test:

```java
// Excerpt from an OWASP Benchmark test case: param (user input) and
// request are defined elsewhere in the test method.
String bar;

String guess = "ABC";
char switchTarget = guess.charAt(1); // condition 'B', which is safe

// Simple case statement that assigns param to bar on conditions 'A', 'C', or 'D'
switch (switchTarget) {
    case 'A':
        bar = param;
        break;
    case 'B':
        bar = "bob";
        break;
    case 'C':
    case 'D':
        bar = param;
        break;
    default:
        bar = "bob's your uncle";
        break;
}

request.getSession().putValue( bar, "10340");
```

Kiuwan applies a simplified evaluation to control statements: all paths in the control flow are treated as if they could execute, and any assignment from user input is considered unsafe. Here, the 'A', 'C', and 'D' branches are treated as reachable even though switchTarget is always 'B'. This can also produce false positives in the Kiuwan results.
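The difference between treating every branch as reachable and evaluating the constant selector can be sketched with a toy Java model of the switch statement in this test. This is our own simplification, not Kiuwan's implementation; `SwitchTaint` and its methods are hypothetical names.

```java
import java.util.Map;

// Toy model (hypothetical, not Kiuwan's implementation) of the switch above.
// Each case label maps to true if that branch assigns user input (param) to bar.
final class SwitchTaint {
    // Map.of requires Java 9 or later.
    static final Map<Character, Boolean> ASSIGNS_PARAM = Map.of(
        'A', true, 'B', false, 'C', true, 'D', true);
    static final boolean DEFAULT_ASSIGNS_PARAM = false; // default branch uses a constant

    // Path-insensitive: every branch is assumed reachable, so bar is unsafe
    // if any branch assigns user input to it.
    static boolean pathInsensitive() {
        return DEFAULT_ASSIGNS_PARAM || ASSIGNS_PARAM.containsValue(true);
    }

    // Path-sensitive: the selector is a known constant, so only the branch
    // actually taken matters.
    static boolean pathSensitive(char switchTarget) {
        return ASSIGNS_PARAM.getOrDefault(switchTarget, DEFAULT_ASSIGNS_PARAM);
    }
}
```

`SwitchTaint.pathInsensitive()` returns true because branches 'A', 'C', and 'D' assign `param`, whereas `SwitchTaint.pathSensitive("ABC".charAt(1))` considers only branch 'B' and returns false. A path-insensitive report for this test is therefore a false positive.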

For the OWASP guide to interpreting the results, refer to the file “OWASP_Benchmark_Guide.html” in the scorecard folder.

To understand a bit more about the OWASP benchmark and how Kiuwan performs with it, read our previous post: The OWASP Benchmark & Kiuwan. Feel free to comment on it and the little “twist” we’ve given to the benchmark as described in the post.